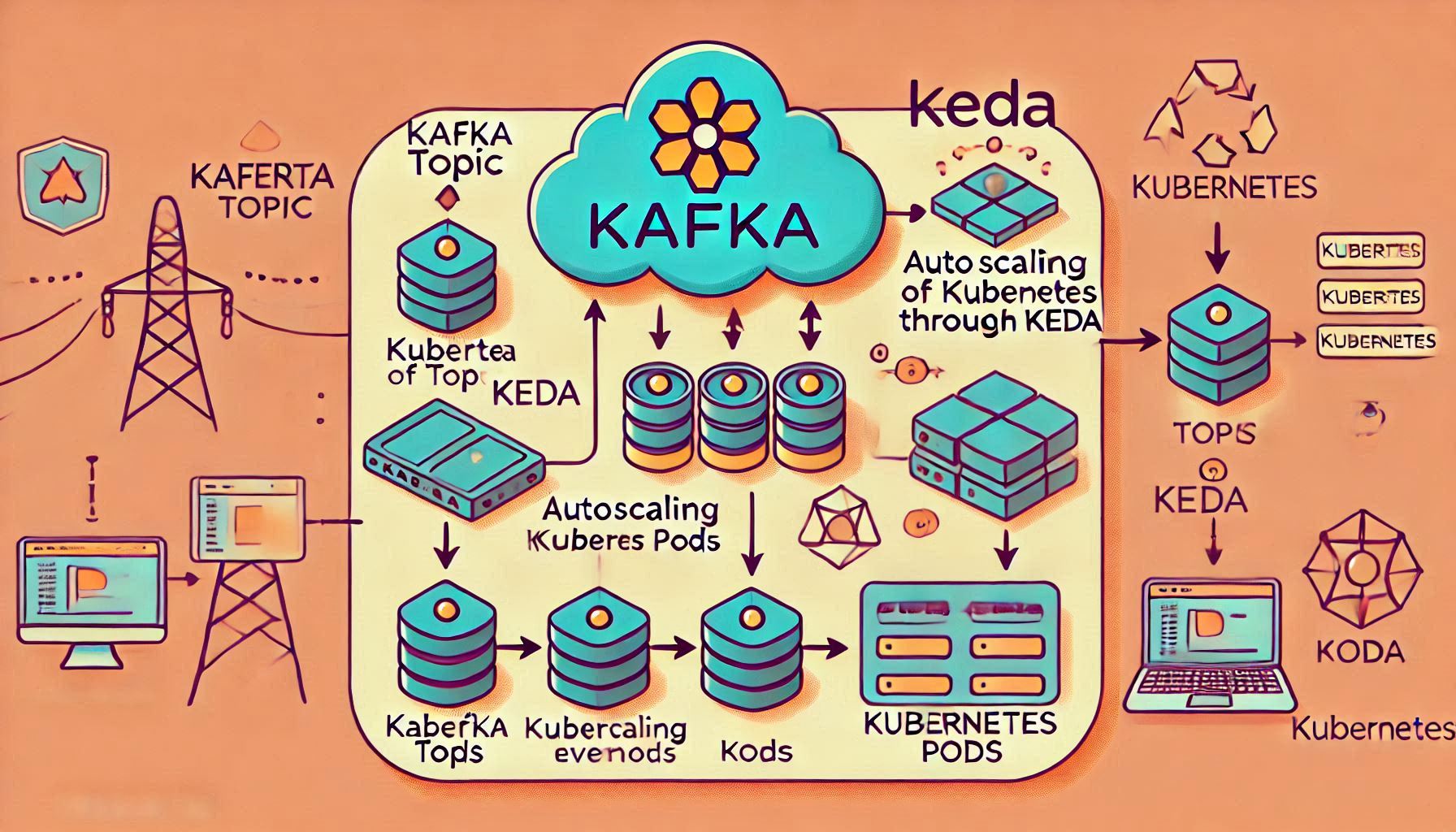

KEDA (Kubernetes Event-Driven Autoscaler) is a powerful tool that allows Kubernetes workloads to scale based on external events. One of its most common use cases is scaling based on Kafka messages using Kafka triggers. In this technical article, we’ll explore how KEDA Kafka triggers work, their configuration, and best practices for implementing them in your Kubernetes environment.

TL;DR

KEDA Kafka triggers enable event-driven autoscaling for Kubernetes workloads by monitoring consumer lag on Kafka topics. When the lag for a consumer group exceeds a defined threshold, KEDA adjusts the number of replicas for the workload. Configure Kafka triggers in a ScaledObject resource and choose thresholds that balance resource usage against processing latency.

What is KEDA?

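KEDA (Kubernetes Event-Driven Autoscaler) extends Kubernetes’ native Horizontal Pod Autoscaler (HPA) by introducing support for event-driven scaling. It monitors external event sources and adjusts the number of replicas in your Deployment or StatefulSet based on real-time demand. Under the hood, KEDA creates and manages an HPA for the target workload and feeds it metrics from the event source; it also handles scaling to and from zero replicas.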

Key Features of KEDA:

- Event-Driven Autoscaling: Scale workloads based on external event sources like Kafka, RabbitMQ, or Prometheus metrics.

- Lightweight and Non-Intrusive: KEDA operates as an external controller without modifying your existing workloads.

- Multi-Scaler Support: KEDA can handle multiple triggers for a single workload.

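- Built-in Scaling Policies: Because KEDA drives a standard HPA, the HPA's scaling behavior settings (stabilization windows, scale-up and scale-down policies) still apply and can be set through the ScaledObject.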

How Do Kafka Triggers Work?

KEDA Kafka triggers track consumer lag: the gap between the latest offset in each partition of a topic and the offset the consumer group has committed. When the lag exceeds the configured threshold, KEDA scales out the associated workload so that more consumers share the backlog. Once the lag drops, KEDA scales the workload back in to save resources.
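
To make the threshold concrete, the fragment below sketches only the trigger portion of a ScaledObject, with the scaling arithmetic written as comments. The lag figures are made-up numbers for illustration, and the arithmetic assumes KEDA's usual model in which lagThreshold is a per-replica target that the HPA averages across pods.

```yaml
# Trigger fragment only (not a complete manifest); values are illustrative.
triggers:
  - type: kafka
    metadata:
      bootstrapServers: "kafka-broker:9092"
      topic: "my-topic"
      consumerGroup: "my-consumer-group"
      # Target lag per replica. Roughly: replicas = ceil(total lag / lagThreshold)
      #   total lag 95 -> ceil(95 / 10) = 10 replicas
      #   total lag  8 -> ceil(8 / 10)  = 1 replica
      lagThreshold: "10"
      # With the default "false", the scaler does not ask for more replicas
      # than the topic has partitions, since extra consumers would sit idle.
      allowIdleConsumers: "false"
```

The resulting replica count is still bounded by the minReplicaCount and maxReplicaCount set on the ScaledObject.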

Key Components:

- Kafka Topic: The source of messages that triggers scaling.

- ScaledObject: A custom Kubernetes resource defining the scaling behavior.

- Trigger Authentication: Specifies the credentials for accessing the Kafka cluster.

Configuring Kafka Triggers in KEDA

Prerequisites

- Kafka Cluster: Ensure you have a running Kafka cluster with accessible topics.

- Kubernetes Cluster: A functional Kubernetes cluster with KEDA installed, following the KEDA installation guide in the official documentation.

- Deployment or StatefulSet: Define the workload you want to scale.
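
As a rough sketch of the last prerequisite, a consumer Deployment might look like the manifest below. The Deployment name, broker address, topic, and consumer group match the values used in the ScaledObject example in this article; the container image and the environment variable names are hypothetical placeholders and depend entirely on how your consumer application reads its configuration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: socketdaddy-kafka-consumer
  namespace: default
spec:
  # replicas is omitted on purpose so that re-applying this manifest does not
  # fight the autoscaler over the replica count.
  selector:
    matchLabels:
      app: socketdaddy-kafka-consumer
  template:
    metadata:
      labels:
        app: socketdaddy-kafka-consumer
    spec:
      containers:
        - name: consumer
          # Hypothetical image; replace with your own consumer application.
          image: registry.example.com/kafka-consumer:1.0.0
          env:
            # Hypothetical variable names read by the consumer application.
            - name: KAFKA_BOOTSTRAP_SERVERS
              value: "kafka-broker:9092"
            - name: KAFKA_TOPIC
              value: "my-topic"
            - name: KAFKA_CONSUMER_GROUP
              value: "my-consumer-group"
          resources:
            requests:
              cpu: "100m"
              memory: "128Mi"
```

Whatever the application looks like, it should consume with the same consumer group that the Kafka trigger watches, since the trigger measures lag for that group.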

Example Configuration

Here’s an example of a ScaledObject configuration for a Kafka trigger:

    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: kafka-scaledobject
      namespace: default
    spec:
      # The workload that KEDA scales
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: socketdaddy-kafka-consumer
      triggers:
        - type: kafka
          metadata:
            bootstrapServers: "kafka-broker:9092"
            topic: "my-topic"
            # Must match the group the consumer application uses
            consumerGroup: "my-consumer-group"
            # Target lag per replica
            lagThreshold: "10"
          authenticationRef:
            name: kafka-trigger-auth

Trigger Authentication

Create a TriggerAuthentication resource to define credentials for the Kafka broker:

    apiVersion: keda.sh/v1alpha1
    kind: TriggerAuthentication
    metadata:
      name: kafka-trigger-auth
      namespace: default
    spec:
      secretTargetRef:
        # SASL mechanism, for example plaintext or scram_sha512. The scaler
        # uses the username and password only when a SASL mode is set.
        - parameter: "sasl"
          name: "kafka-secret"
          key: "sasl"
        - parameter: "username"
          name: "kafka-secret"
          key: "username"
        - parameter: "password"
          name: "kafka-secret"
          key: "password"

Kubernetes Secret

Create the Kubernetes secret for Kafka credentials:

    apiVersion: v1
    kind: Secret
    metadata:
      name: kafka-secret
      namespace: default
    type: Opaque
    data:
      sasl: <base64-encoded-sasl-mechanism>
      username: <base64-encoded-username>
      password: <base64-encoded-password>

Best Practices for KEDA Kafka Triggers

- Monitor Kafka Metrics: Use monitoring tools such as Prometheus and Grafana to track message rates and consumer lag.

- Set Reasonable Thresholds: Choose lag thresholds that align with your application’s performance requirements and SLA.

- Implement Dead Letter Queues (DLQs): Configure DLQs for messages that cannot be processed to avoid losing critical data.

- Secure Kafka Connections: Use SSL/TLS for Kafka connections and store credentials in Kubernetes Secrets.

- Test Scaling Scenarios: Simulate high message rates in your Kafka topics to test autoscaling behavior.
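
The threshold and security practices above could translate into a configuration along the following lines. This is a sketch under assumptions rather than a recommended setup: the numeric values, the TLS listener port 9093, the 12-partition topic, and the kafka-trigger-auth-tls and kafka-tls-secret names are all hypothetical and should be replaced with values that fit your cluster.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: kafka-scaledobject
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: socketdaddy-kafka-consumer
  pollingInterval: 15      # seconds between lag checks (KEDA default: 30)
  minReplicaCount: 1       # keep one consumer running at all times
  maxReplicaCount: 12      # assumed to match the topic's partition count
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleDown:
          # Wait for lag to stay low before removing pods
          stabilizationWindowSeconds: 300
          policies:
            # Remove at most half of the pods per minute
            - type: Percent
              value: 50
              periodSeconds: 60
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: "kafka-broker:9093"   # assumed TLS listener
        topic: "my-topic"
        consumerGroup: "my-consumer-group"
        lagThreshold: "50"
      authenticationRef:
        name: kafka-trigger-auth-tls
---
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: kafka-trigger-auth-tls      # hypothetical name
  namespace: default
spec:
  secretTargetRef:
    # The secret value for "tls" is expected to be "enable"
    - parameter: tls
      name: kafka-tls-secret        # hypothetical Secret holding tls and ca
      key: tls
    # CA certificate used to verify the brokers
    - parameter: ca
      name: kafka-tls-secret
      key: ca
```

A slower scale-down than scale-up is a common choice for Kafka consumers, because every change in replica count triggers a consumer group rebalance that briefly pauses processing.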

Known Issues and Limitations

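- Trigger Lag: Scaling is not instantaneous. KEDA polls the trigger on an interval (pollingInterval, 30 seconds by default), and new pods still need to start and join the consumer group, so expect brief delays during sudden load spikes.

- Resource Constraints: Ensure your cluster has sufficient resources to handle scale-ups; pods that cannot be scheduled do nothing to reduce lag.

- Multiple Triggers: When a ScaledObject defines several triggers, the HPA acts on whichever one requests the most replicas, so a single noisy trigger can keep the workload scaled out longer than expected.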
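
One way to reason about several triggers on a single ScaledObject is to work out what each one would request on its own. The fragment below is a sketch with two Kafka triggers; the topic names, thresholds, and lag figures in the comments are invented for illustration, and each topic is assumed to have at least as many partitions as the replicas requested.

```yaml
# Trigger fragment only (not a complete manifest); values are illustrative.
triggers:
  - type: kafka
    metadata:
      bootstrapServers: "kafka-broker:9092"
      topic: "orders"                # hypothetical topic
      consumerGroup: "my-consumer-group"
      lagThreshold: "10"             # lag 40  -> asks for 4 replicas
  - type: kafka
    metadata:
      bootstrapServers: "kafka-broker:9092"
      topic: "audit-events"          # hypothetical topic
      consumerGroup: "my-consumer-group"
      lagThreshold: "100"            # lag 900 -> asks for 9 replicas
# The HPA follows the larger request, so the workload runs 9 replicas
# (subject to maxReplicaCount) even though "orders" needs only 4.
```

If the two topics have very different cost profiles, separate Deployments with their own ScaledObjects may be easier to tune than one shared workload.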

Conclusion

KEDA Kafka triggers are a robust solution for scaling Kubernetes workloads based on Kafka events. By implementing event-driven autoscaling, you can optimize resource utilization, improve performance, and reduce costs. Follow the best practices outlined in this guide to ensure a smooth integration of KEDA Kafka triggers into your applications.