Kubernetes cluster administrators often need control over the storage lifecycle. The reclaim policy determines what happens to a PersistentVolume after its PersistentVolumeClaim is deleted. This article shows how to change the reclaim policy, typically from Delete to Retain, and notes where the deprecated Recycle policy fits. It also covers volume types, provisioning, CSI, snapshots, access modes, quotas, security contexts, and event-driven use cases.

TL;DR

- Reclaim policy controls PV cleanup on release.

- Supported policies: Delete and Retain; Recycle is deprecated.

- Use kubectl patch or kubectl edit to update persistentVolumeReclaimPolicy.

- CSI drivers honor the reclaimPolicy set on the StorageClass.

- Retain helps audit data before manual deletion; Delete automates cleanup.

- Event-driven workflows can trigger snapshots or cleanup via operators.

Understanding Change Reclaim Policy

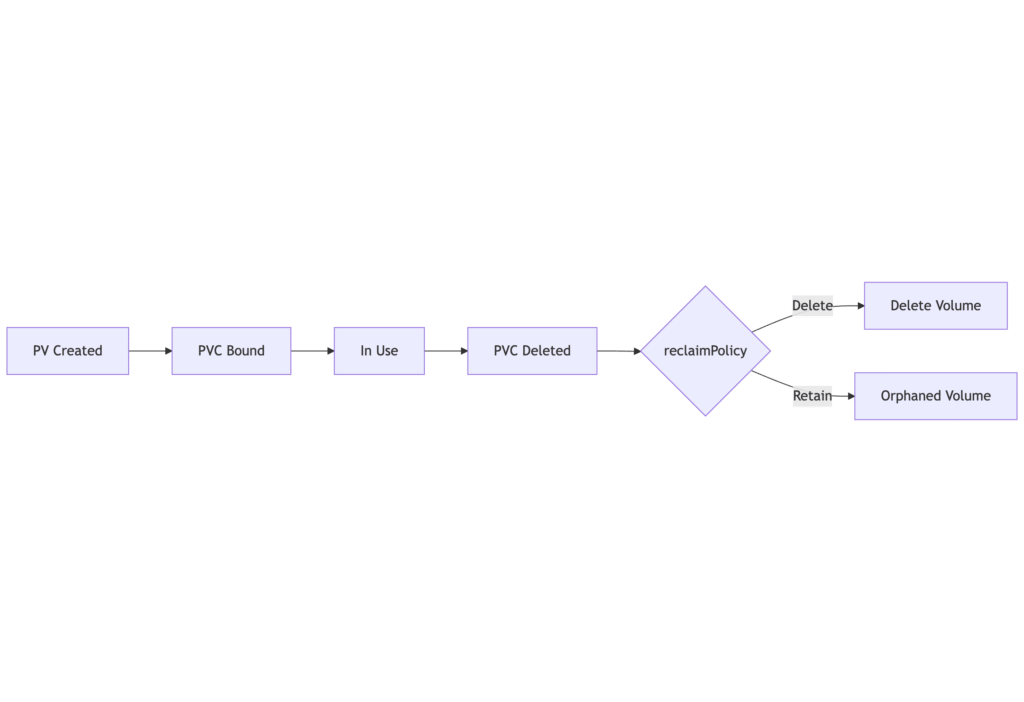

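PersistentVolumes (PVs) represent durable storage in Kubernetes. Each PV has a spec.persistentVolumeReclaimPolicy field, which tells the control plane what to do with the volume once a user deletes the bound PersistentVolumeClaim (PVC). Two policies remain in active use. Delete removes the PV object together with the backing storage asset. Retain keeps both, leaving the PV in the Released phase until an administrator reclaims it manually. The deprecated Recycle policy performed a basic scrub (rm -rf /thevolume/*) and was only supported for NFS and HostPath volumes.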

Change Reclaim Policy Steps

You can change persistentVolumeReclaimPolicy on an existing PV at any time, including while it is Bound to a claim; the new policy takes effect when the claim is later deleted. Use either kubectl patch or kubectl edit.
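When the change needs to be reviewed or kept in version control, the same patch can live in a file. The sketch below assumes a hypothetical file name, retain-patch.yaml, and a PV named my-pv, the name used in the commands that follow.

```yaml
# retain-patch.yaml (hypothetical file name)
# Patch body that switches a PV's reclaim policy to Retain.
spec:
  persistentVolumeReclaimPolicy: Retain
```

It could then be applied with kubectl patch pv my-pv --patch-file retain-patch.yaml. Afterwards, kubectl get pv my-pv should list Retain in the RECLAIM POLICY column.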

Using kubectl patch

kubectl patch pv my-pv --patch '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

Using kubectl edit

kubectl edit pv my-pv

# change spec.persistentVolumeReclaimPolicy from Delete to Retain

Reclaim Policy Lifecycle

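PVC binding and PV lifecycle follow this sequence:

- Provisioning: an administrator creates the PV statically, or a StorageClass creates it dynamically. A statically created PV waits in the Available phase.

- Binding: the control plane matches the PV to a PVC, and the PV becomes Bound.

- Using: Pods mount the claim as a volume.

- Reclaiming: when the PVC is deleted, the PV becomes Released and the reclaim policy decides whether it is retained or deleted.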
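Once a claim is deleted under Retain, the PV ends up in the Released phase. The excerpt below sketches what such a PV might look like; the claim name and namespace are illustrative, not taken from a real cluster.

```yaml
# Excerpt of a retained PV after its claim was deleted
# (for example from: kubectl get pv my-pv -o yaml).
spec:
  persistentVolumeReclaimPolicy: Retain
  claimRef:                      # still points at the deleted claim
    kind: PersistentVolumeClaim
    namespace: default           # illustrative
    name: my-claim               # illustrative
status:
  phase: Released                # not Available: the old data is still on the volume
```

A Released PV is not offered to new claims. After the data has been checked or backed up, an administrator typically either deletes the PV and its backing storage, or clears spec.claimRef so that the PV returns to Available.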

Change Reclaim Policy Use Cases

Administrators choose policies based on compliance, cost, and security:

- Backup before deletion: Set Retain. Trigger snapshot pipeline via event hook.

- Auto cleanup: Use Delete with dynamic provisioning by StorageClass.

- Manual audit: With Retain, released volumes stay in place until an administrator inspects and removes them.
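For the backup and audit cases, dynamically provisioned volumes can be given Retain from the start by setting it on the StorageClass. The following is a minimal sketch: the class name gp3-retain is hypothetical, and the provisioner shown is the AWS EBS CSI driver, which you would replace with the driver your cluster actually runs.

```yaml
# Hypothetical StorageClass for volumes that must survive claim deletion.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3-retain               # illustrative name
provisioner: ebs.csi.aws.com     # assumes the AWS EBS CSI driver is installed
parameters:
  type: gp3
reclaimPolicy: Retain            # PVs created from this class keep their data
volumeBindingMode: WaitForFirstConsumer
```

The class only affects PVs created from it. Existing PVs keep the policy they were created with, so they still need to be patched individually.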

Volume Types and Provisioning

Kubernetes supports multiple volume plugins. Static provisioning uses admin-defined PV YAML. Dynamic provisioning relies on StorageClass.

HostPath and NFS (Static)

apiVersion: v1
kind: PersistentVolume
metadata:
  name: static-nfs-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /exports/data
    server: nfs.example.com
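A statically provisioned PV is only useful once a claim binds to it. One way to pin a claim to this specific volume is sketched below; the claim name static-nfs-claim is an assumption made for illustration.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-nfs-claim         # illustrative name
spec:
  storageClassName: ""           # empty string: do not provision dynamically
  volumeName: static-nfs-pv      # bind to the static PV defined above
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
```

Because the PV uses Retain, deleting this claim leaves the NFS data in place and the PV in the Released phase.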

AWS EBS, GCE PD, Azure Disk (Dynamic)

StorageClass example:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp2-delete
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer

CSI Drivers and Reclaim Policy

CSI drivers implement the CreateVolume and DeleteVolume calls along with the snapshot APIs. A dynamically provisioned PV inherits reclaimPolicy from its StorageClass, and the backing volume is deleted only when that policy is Delete. Drivers may also support volume expansion and snapshots.
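A claim against a StorageClass is what triggers dynamic provisioning, and the PV created for it takes the reclaimPolicy of that class. As a rough sketch, a claim against the gp2-delete class from the previous section might look like this; the claim name and size are illustrative.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data                 # illustrative name
spec:
  storageClassName: gp2-delete   # class defined in the StorageClass example
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi              # illustrative size
```

With this class, deleting app-data also removes the provisioned PV and its backing disk. To keep the data, patch the PV to Retain before deleting the claim.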

Capacity, Snapshots, and Clones

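You can snapshot a PVC using the VolumeSnapshot API. A snapshot is a separate object from both the PVC and the PV, and its retention is governed by the deletionPolicy of its VolumeSnapshotClass rather than by the PV reclaim policy. After taking a snapshot, you can delete the PVC and let the PV be reclaimed according to its policy, typically without losing the snapshot, though this depends on the storage backend. To restore or clone, create a new PVC whose dataSource references the snapshot, using a StorageClass whose driver supports it.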
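The snapshot and restore flow can be sketched with two manifests. All names here (app-data, app-data-snap, csi-snapclass, csi-storage) are hypothetical, and the example assumes the snapshot CRDs, the snapshot controller, and a CSI driver with snapshot support are installed.

```yaml
# Snapshot of an existing claim.
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: app-data-snap
spec:
  volumeSnapshotClassName: csi-snapclass   # hypothetical VolumeSnapshotClass
  source:
    persistentVolumeClaimName: app-data    # hypothetical source PVC
---
# New claim restored from that snapshot.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data-restored
spec:
  storageClassName: csi-storage            # hypothetical CSI-backed class
  dataSource:
    name: app-data-snap
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi                        # should be at least the snapshot size
```

The restored claim gets its own PV, whose reclaim policy again comes from the StorageClass it was provisioned with.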

Access Modes and Security Contexts

PVs support access modes:

- ReadWriteOnce (RWO)

- ReadOnlyMany (ROX)

- ReadWriteMany (RWX)

The Pod securityContext controls fsGroup and SELinux labels, and volumes mounted from a PVC are set up with these settings where the volume type supports it. Quotas on PVCs enforce storage limits per namespace.
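As an illustration of how these settings sit next to a PVC mount, here is a minimal Pod sketch. The Pod name, image, group ID, SELinux level, and claim name are all assumptions chosen for the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app                      # illustrative name
spec:
  securityContext:
    fsGroup: 2000                # group applied to the volume's files where supported
    seLinuxOptions:
      level: "s0:c123,c456"      # illustrative SELinux level
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data      # hypothetical PVC
```

The access mode is declared on the PVC and PV, not on the Pod, so the claim referenced here must already allow the kind of access the workload needs.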

Quotas and Resource Limits

Define ResourceQuota to limit total PVC storage in a namespace:

apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-quota
spec:
  hard:
    requests.storage: 100Gi
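Quotas can also be scoped to a single StorageClass and can cap the number of claims. The variant below is a sketch that reuses the gp2-delete class name from earlier; the quota name and the limits are illustrative.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-quota-by-class   # illustrative name
spec:
  hard:
    persistentvolumeclaims: "10"   # maximum number of PVCs in the namespace
    requests.storage: 100Gi        # total requested across all classes
    gp2-delete.storageclass.storage.k8s.io/requests.storage: 50Gi   # cap for one class
```

These quotas count PVC requests. A PV kept by Retain after its claim is deleted no longer counts against the namespace quota, although it still occupies space on the storage backend.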

