Scheduling pods to specific nodes ensures performance, compliance, and resource isolation. Kubernetes offers nodeName, nodeSelector, nodeAffinity, taints, and tolerations for precise Pod Node Assignment. You can also integrate persistent storage, CSI drivers, quotas, security contexts, and event-driven workflows for robust deployments.

TL;DR

- Use nodeName for hard scheduling to a specific node.

- Apply nodeSelector or nodeAffinity for label-based Pod Node Assignment.

- Leverage taints and tolerations to isolate workloads and enforce node reservations.

- Define PersistentVolumeClaims, StorageClasses, and CSI for dynamic storage provisioning.

- Implement resource quotas, security contexts, and access modes for multi-tenant clusters.

- Combine scheduling and storage in event-driven flows using initContainers and sidecars.

Pod Node Assignment Overview

Kubernetes schedules pods based on available resources and constraints. By default, the scheduler filters out nodes that cannot run the pod and scores the remainder, which tends to spread pods across nodes. To override this, set nodeName, nodeSelector, or affinity.nodeAffinity in the Pod spec. You can also use taints and tolerations to repel or admit pods.

Pod Node Assignment Strategies

Three primary strategies exist:

- Direct binding via nodeName.

- Label-based selection with nodeSelector or nodeAffinity.

- Policy-driven using taints and tolerations.
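
Direct binding can be sketched as the manifest below. The pod name pinned-pod and the node name node01 are assumptions for illustration; nodeName must match an existing node exactly. Setting nodeName bypasses the scheduler, so if the node is missing or lacks resources the pod will not run and is not rescheduled elsewhere.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pinned-pod          # hypothetical pod name
spec:
  nodeName: node01          # assumed node name; the scheduler is skipped entirely
  containers:
    - name: web
      image: nginx
```

Because it skips scheduling checks, nodeName tends to suit debugging or one-off placement better than production workloads, where label-based selection is usually more resilient.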

Pod Node Assignment with nodeSelector

nodeSelector filters nodes by labels. It offers a simple key-value match.

    apiVersion: v1
    kind: Pod
    metadata:
      name: web-pod
    spec:
      nodeSelector:
        disktype: ssd
      containers:
        - name: web
          image: nginx

Above, Kubernetes schedules web-pod on nodes labeled disktype=ssd. If no matching node exists, the pod remains Pending.

Pod Node Assignment with Node Affinity

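nodeAffinity provides more expressive rules in two forms: required (hard constraints) and preferred (weighted soft constraints). Match expressions support operators such as In, NotIn, Exists, DoesNotExist, Gt, and Lt.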

    apiVersion: v1
    kind: Pod
    metadata:
      name: db-pod
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: "zone"
                    operator: In
                    values:
                      - "us-east-1a"
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 1
              preference:
                matchExpressions:
                  - key: "gpu"
                    operator: Exists
      containers:
        - name: db
          image: mysql

This schedules db-pod on nodes in us-east-1a, preferring nodes with GPU labels.

Pod Node Assignment with Taints and Tolerations

Taints repel pods; tolerations admit them. Taint a node to repel generic pods.

    # Taint a node
    kubectl taint nodes node01 key=reserved:NoSchedule

    # Pod toleration
    apiVersion: v1
    kind: Pod
    metadata:
      name: reserved-pod
    spec:
      tolerations:
        - key: "key"
          operator: "Equal"
          value: "reserved"
          effect: "NoSchedule"
      containers:
        - name: app
          image: alpine

reserved-pod can be scheduled on node01 despite the taint. A toleration only permits placement; it does not force the pod onto node01, so pair it with nodeSelector or nodeAffinity when the pod must land there. Other pods lack the toleration and cannot schedule there.
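
To reserve a node and also steer the workload onto it, the toleration is commonly paired with a label selector. The sketch below assumes node01 additionally carries a hypothetical label dedicated=reserved (for example, applied with kubectl label nodes node01 dedicated=reserved); the pod name and the sleep command are also illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: reserved-pinned-pod       # hypothetical pod name
spec:
  nodeSelector:
    dedicated: reserved           # hypothetical label assumed to exist on node01
  tolerations:
    - key: "key"                  # matches the taint key=reserved:NoSchedule
      operator: "Equal"
      value: "reserved"
      effect: "NoSchedule"
  containers:
    - name: app
      image: alpine
      command: ["sleep", "3600"]  # keeps the container running for inspection
```

The taint keeps other pods off the node, while the nodeSelector keeps this pod from drifting to untainted nodes.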

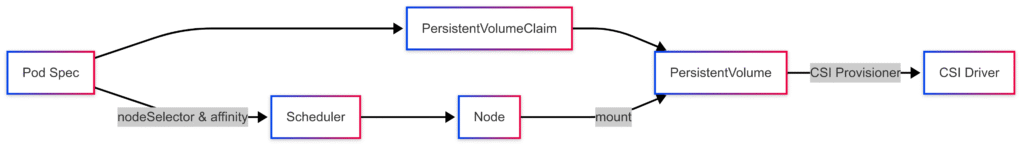

Scheduling and Storage Flow

Volume Types for Pods

Kubernetes supports multiple volume types: hostPath, emptyDir, configMap, secret, persistentVolumeClaim, and CSI volumes. Use persistentVolumeClaim for durable storage.

Storage Lifecycle and CSI

Storage lifecycle involves provisioning, binding, using, and reclaiming volumes. Dynamic provisioning uses StorageClasses and CSI drivers to create PVs on demand.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: fast-ssd
    provisioner: csi.example.com
    parameters:
      type: pd-ssd
    reclaimPolicy: Delete
    volumeBindingMode: WaitForFirstConsumer

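A StorageClass named fast-ssd uses a CSI plugin. The Delete reclaim policy removes the PV after its PVC is deleted. WaitForFirstConsumer delays provisioning and binding until a pod that uses the PVC is scheduled, so the volume is created where the pod's node constraints allow.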

PersistentVolumeClaim and Access Modes

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: data-claim
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      storageClassName: fast-ssd

ReadWriteOnce allows the volume to be mounted read-write by a single node. Other modes: ReadOnlyMany, ReadWriteMany, and ReadWriteOncePod.
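
A pod consumes the claim by referencing it in a volume. The following is a minimal sketch; the pod name data-pod, the volume name data, and the mount path /data are assumptions, while data-claim and the disktype=ssd label come from the examples above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: data-pod                  # hypothetical pod name
spec:
  nodeSelector:
    disktype: ssd                 # same label as in the nodeSelector example
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /data        # assumed mount path
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: data-claim     # the PVC defined above
```

With volumeBindingMode set to WaitForFirstConsumer on the StorageClass, the claim stays Pending until a pod like this one is scheduled, which lets provisioning take the pod's node constraints into account.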

Capacity, Snapshots, and Reclaim Policy

PVCs request capacity; PVs define actual size. Snapshots capture data state via VolumeSnapshot resources. Reclaim policies control PV cleanup.
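
A snapshot of a claim might look like the sketch below. It assumes the VolumeSnapshot CRDs and snapshot controller are installed and that the CSI driver supports snapshots; the names data-claim-snap and csi-snapclass are hypothetical.

```yaml
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: data-claim-snap                     # hypothetical snapshot name
spec:
  volumeSnapshotClassName: csi-snapclass    # hypothetical VolumeSnapshotClass
  source:
    persistentVolumeClaimName: data-claim   # PVC to snapshot, in the same namespace
```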

Resource Quotas and Security Contexts

Use ResourceQuota to limit PVC counts and storage consumption. Apply securityContext in Pod spec to enforce fsGroup, runAsUser, and SELinux labels:

    spec:
      securityContext:
        fsGroup: 2000
        runAsUser: 1000

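Here fsGroup sets the group ownership applied to mounted volumes that support it, and runAsUser sets the UID the containers run as, so volume permissions align with Pod requirements.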
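
A storage-focused ResourceQuota could be sketched as follows. The namespace team-a, the quota name, and the specific limits are assumptions chosen for illustration; the fast-ssd prefix refers to the StorageClass defined earlier.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-quota             # hypothetical quota name
  namespace: team-a               # hypothetical tenant namespace
spec:
  hard:
    persistentvolumeclaims: "5"   # at most five PVCs in the namespace
    requests.storage: 100Gi       # total storage requested across all PVCs
    # Per-StorageClass cap for claims that use fast-ssd
    fast-ssd.storageclass.storage.k8s.io/requests.storage: 50Gi
```

Once the quota is active, a PVC that would push the namespace past any of these limits is rejected at creation time.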

Pod Node Assignment Use-Cases

Common scenarios:

- High-performance workloads on GPU nodes.

- Data residency compliance by zone.

- Isolation of critical services via taints.

- Event-driven flow: initContainer fetches model from object store, main container processes data and writes to PVC.

Event-Driven Pod Node Assignment

Combine scheduling with initContainers and sidecars for event-driven pipelines. Example: a pod is created in response to Kafka events and scheduled onto SSD nodes for low-latency processing; an initContainer prepares the environment before the main container starts.
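
The pod side of such a pipeline can be sketched as below. Everything specific here is an assumption: the pod name, the worker image, the MODEL_URL value, and the use of the curlimages/curl image for the download step. How the pod is created from Kafka events is outside this sketch.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: model-worker                        # hypothetical pod name
spec:
  nodeSelector:
    disktype: ssd                           # place the pod on SSD-labeled nodes
  initContainers:
    - name: fetch-model
      image: curlimages/curl                # assumed image for the download step
      command: ["sh", "-c", "curl -fsSL \"$MODEL_URL\" -o /models/model.bin"]
      env:
        - name: MODEL_URL
          value: "https://example.com/model.bin"   # placeholder object-store URL
      volumeMounts:
        - name: models
          mountPath: /models
  containers:
    - name: worker
      image: example.com/worker:latest      # hypothetical processing image
      volumeMounts:
        - name: models
          mountPath: /models
          readOnly: true
        - name: data
          mountPath: /data                  # results are written to the PVC
  volumes:
    - name: models
      emptyDir: {}                          # scratch space shared with the initContainer
    - name: data
      persistentVolumeClaim:
        claimName: data-claim
```

The initContainer must exit successfully before the worker starts, so a failed download keeps the main container from running against a missing model.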
