If you’ve been working with Apache Kafka, you’ve likely heard of the Kafka Idempotent Producer. But what does it really mean, and why is it important? In simple terms, it ensures that a message is written to a topic partition only once, even if the producer has to retry sending it after something goes wrong. This article covers what the Kafka Idempotent Producer is, how it works, and why it’s a game-changer for anyone building reliable, distributed systems.

TL;DR

The Kafka Idempotent Producer eliminates duplicate messages caused by producer retries, giving exactly-once write semantics within a Kafka partition for the lifetime of a producer session. When you set enable.idempotence=true, Kafka handles retries without writing duplicates.

Starting with Kafka 3.0, producers have enable.idempotence=true and acks=all by default. See KIP-679 for more details.

Why Do We Need an Idempotent Producer?

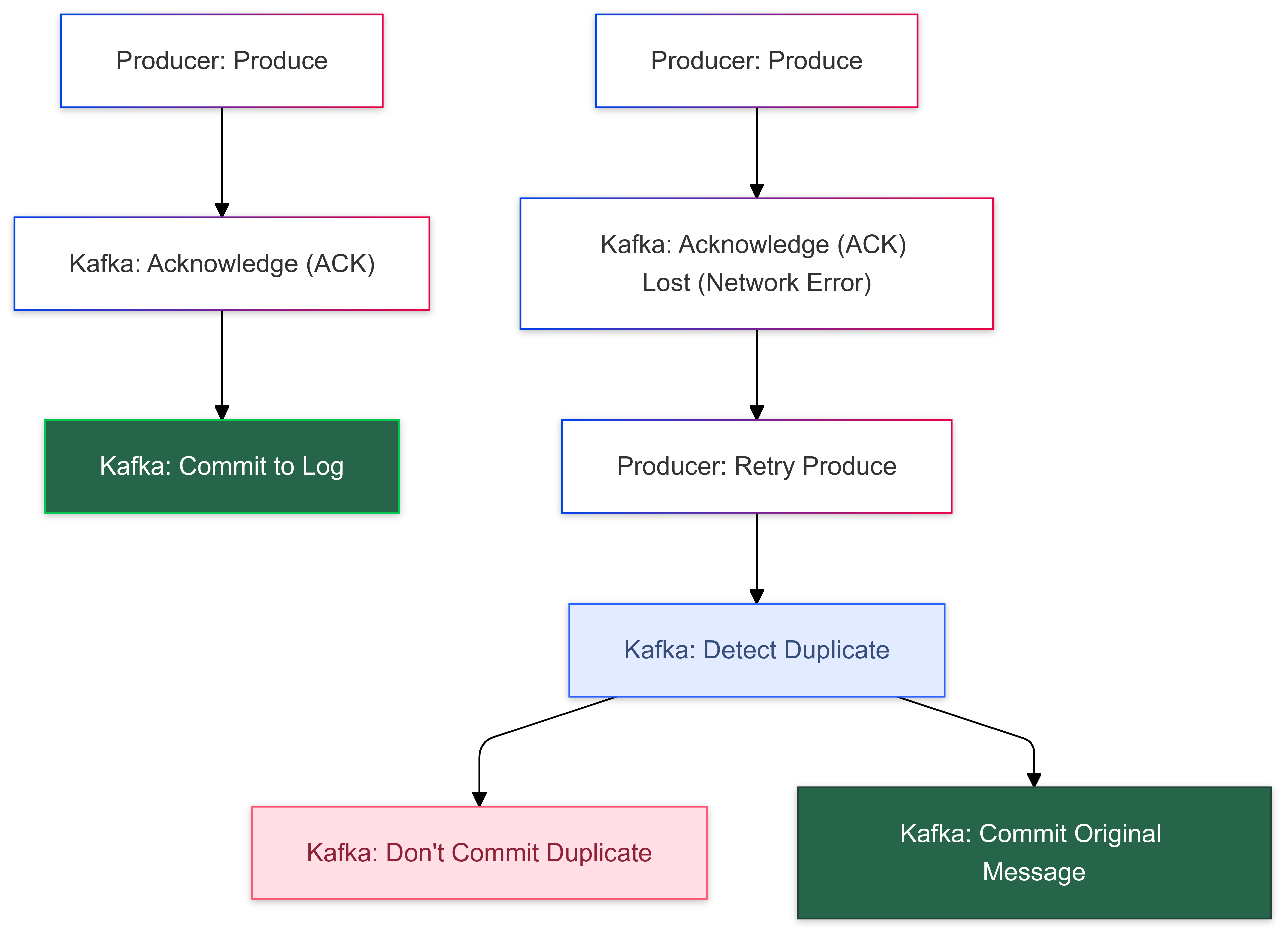

Kafka producers are responsible for sending messages to Kafka topics. But here’s the problem: in distributed systems, things go wrong. A network glitch or a failed broker can leave the producer without an acknowledgment, so it retries the send. If the broker had in fact already written the first attempt and only the acknowledgment was lost, the retry writes the same message a second time. Without an idempotent producer, this leads to duplicates, which is not ideal if you’re working with financial transactions or other critical systems.

The Kafka Idempotent Producer solves this by making retries safe: each message is written once, no matter how many times the producer retries it.

How the Kafka Idempotent Producer Works

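- Producer IDs and Sequence Numbers

When you enable idempotence, the producer obtains a unique Producer ID (PID) from the broker when it initializes. Each message it sends to a partition gets a sequence number, and those numbers increase by one per partition. Kafka uses the pair (PID, sequence number) to tell a retry apart from a new message.

- Message Deduplication at the Broker

For each partition, the broker tracks the latest sequence numbers it has written for each PID and accepts a batch only if its sequence number is the next one expected. If a batch arrives with a sequence number that was already written, the broker acknowledges it again without storing it a second time. If it arrives with a gap (a higher sequence number than expected), the broker rejects it with an OutOfOrderSequenceException.

- Acknowledgments and Retries

The producer waits for an acknowledgment (ACK) from the broker before considering a message successfully sent. If the ACK isn’t received due to a network issue or timeout, the producer retries with the same PID and sequence number, so the broker can recognize the retry and no duplicate is written.

- Safe Defaults

Idempotence requires compatible values for a few other producer properties, and the producer uses safe values for them by default:

  - acks=all: the leader waits until all in-sync replicas have confirmed receipt.
  - retries=Integer.MAX_VALUE: effectively unlimited retries, bounded in practice by delivery.timeout.ms (two minutes by default).
  - max.in.flight.requests.per.connection=5: must be at most 5, because the broker keeps sequence metadata for the last five batches per producer and partition; within that limit, ordering is preserved and duplicates are detected even with several requests in flight.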
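To make the sequence-number check concrete, here is a deliberately simplified Java model of one partition on the broker. It is a sketch under stated assumptions, not Kafka’s implementation: the class and method names are hypothetical, each append stands for a batch of one message, and the model remembers only the last accepted sequence number per PID, whereas the real broker keeps metadata for the last five batches.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical toy model of broker-side deduplication for ONE partition.
 * Simplification: only the last accepted sequence number per PID is kept;
 * the real broker keeps metadata for the last five batches per producer.
 */
public class SequenceCheckDemo {

    enum Result { APPENDED, DUPLICATE, OUT_OF_ORDER }

    static class PartitionLog {
        final List<String> records = new ArrayList<>();
        // Last accepted sequence number for each producer ID on this partition.
        final Map<Long, Integer> lastSeqByPid = new HashMap<>();

        Result append(long pid, int seq, String value) {
            int last = lastSeqByPid.getOrDefault(pid, -1); // new PIDs start at sequence 0
            if (seq <= last) {
                return Result.DUPLICATE;    // already written: acknowledge, do not append again
            }
            if (seq != last + 1) {
                return Result.OUT_OF_ORDER; // gap: an earlier message has not arrived yet
            }
            records.add(value);
            lastSeqByPid.put(pid, seq);
            return Result.APPENDED;
        }
    }

    public static void main(String[] args) {
        PartitionLog log = new PartitionLog();
        long pid = 1000L; // hypothetical PID

        System.out.println(log.append(pid, 0, "payment-1")); // APPENDED
        // The ACK for sequence 0 was lost, so the producer retries the same message:
        System.out.println(log.append(pid, 0, "payment-1")); // DUPLICATE
        System.out.println(log.append(pid, 2, "payment-3")); // OUT_OF_ORDER (sequence 1 missing)
        System.out.println(log.append(pid, 1, "payment-2")); // APPENDED
        System.out.println(log.records);                     // [payment-1, payment-2]
    }
}
```

The second call models the retry described above: the broker has already written sequence 0 for this PID, so it acknowledges the retry without appending it again, and the log ends up with each payment exactly once.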


Enabling Kafka Idempotent Producer

Setting up an idempotent producer is straightforward. You only need to enable one configuration:

enable.idempotence=true

Here’s an example in Java:

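```java
import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;

public class IdempotentProducerExample {
    public static void main(String[] args) {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("enable.idempotence", "true"); // the one setting that turns on idempotence

        // try-with-resources closes the producer, flushing any pending sends
        try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
            // producer.send(...) calls go here
        }
    }
}
```

And that’s it! Once enabled, Kafka takes care of the rest.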
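If you also set acks, retries, or max.in.flight.requests.per.connection yourself alongside enable.idempotence=true, the values must stay compatible with idempotence; the Kafka producer rejects an incompatible combination with a ConfigException when it is constructed. The helper below is a hypothetical pre-flight check (not part of the Kafka API) that mirrors those three documented rules, assuming unset keys fall back to the Kafka 3.0+ defaults.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/**
 * Hypothetical pre-flight check mirroring the producer's documented
 * requirements for enable.idempotence=true. Not part of the Kafka API.
 */
public class IdempotenceConfigCheck {

    static List<String> problems(Properties props) {
        List<String> problems = new ArrayList<>();
        // Assumption: unset keys fall back to the Kafka 3.0+ producer defaults.
        String acks = props.getProperty("acks", "all");
        int retries = Integer.parseInt(
                props.getProperty("retries", String.valueOf(Integer.MAX_VALUE)));
        int maxInFlight = Integer.parseInt(
                props.getProperty("max.in.flight.requests.per.connection", "5"));

        if (!acks.equals("all") && !acks.equals("-1")) { // "-1" is equivalent to "all"
            problems.add("acks must be all (got " + acks + ")");
        }
        if (retries <= 0) {
            problems.add("retries must be greater than 0 (got " + retries + ")");
        }
        if (maxInFlight > 5) {
            problems.add("max.in.flight.requests.per.connection must be at most 5 (got " + maxInFlight + ")");
        }
        return problems;
    }

    public static void main(String[] args) {
        Properties ok = new Properties();
        ok.put("enable.idempotence", "true");
        System.out.println(problems(ok));         // []

        Properties bad = new Properties();
        bad.put("enable.idempotence", "true");
        bad.put("acks", "1");
        bad.put("max.in.flight.requests.per.connection", "10");
        System.out.println(problems(bad).size()); // 2
    }
}
```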

Common Scenarios Where It Comes In Handy

- Financial Transactions

Imagine you’re processing payments. A duplicate write could mean charging a customer twice, which is not good! The idempotent producer guarantees that a payment event retried by the producer is recorded only once.

- Event Deduplication

In event-driven architectures, deduplication at the application level can be messy. The idempotent producer moves deduplication of retry-induced duplicates into Kafka, simplifying your code.

- Data Pipelines

When building data pipelines, you want your data to flow smoothly without worrying about duplicates during retries. The idempotent producer ensures clean data delivery.

Limitations of the Kafka Idempotent Producer

While it’s powerful, the idempotent producer does have its boundaries:

- Partition-Specific

The idempotent producer guarantees exactly-once writes within a single partition and a single producer session. Sequence numbers are tracked per partition, so writes that must succeed or fail together across partitions need transactions. And if the producer restarts, it gets a new PID, so a message your application resends after the restart is not recognized as a duplicate.

- Resource Overhead

Enabling idempotence adds some overhead to track sequence numbers and manage retries, which could slightly impact performance.

- Not a Silver Bullet

It doesn’t replace application-level deduplication in every case. For example, external systems consuming Kafka data might still need deduplication logic.
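One way to add that logic on the consuming side is to deduplicate by a business key that the application controls. The sketch below assumes each event carries a hypothetical paymentId and keeps processed IDs in an in-memory set; a real service would use a durable store (for example, a database table with a unique constraint) so the check survives restarts.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/**
 * Sketch of application-level deduplication on the consumer side.
 * Assumption: every event carries a stable business key (hypothetical paymentId).
 */
public class PaymentDeduplicator {

    record PaymentEvent(String paymentId, long amountCents) {}

    // In-memory for illustration only; use a durable store in production.
    private final Set<String> processed = new HashSet<>();
    final List<PaymentEvent> charged = new ArrayList<>();

    /** Returns true if the event was applied, false if it was a duplicate. */
    boolean handle(PaymentEvent event) {
        if (!processed.add(event.paymentId())) {
            return false;    // key seen before: skip the side effect
        }
        charged.add(event);  // stand-in for the real side effect (charging the customer)
        return true;
    }

    public static void main(String[] args) {
        PaymentDeduplicator dedup = new PaymentDeduplicator();
        // The same payment written twice, e.g. resent by a restarted producer
        // that received a new PID, so the broker could not deduplicate it.
        System.out.println(dedup.handle(new PaymentEvent("pay-42", 1999))); // true
        System.out.println(dedup.handle(new PaymentEvent("pay-42", 1999))); // false
        System.out.println(dedup.charged.size());                            // 1
    }
}
```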

Idempotent Producer vs. Transactions

People often confuse the idempotent producer with Kafka transactions. While they’re related, they’re not the same thing:

- Idempotent Producer: Guarantees that producer retries don’t create duplicates within a single partition.

- Transactions: Extend exactly-once guarantees across multiple partitions and topics, ensuring atomic writes.

For instance, if you’re writing to multiple topics in a distributed system, you’ll need transactions to ensure atomicity.
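The atomicity that transactions add can be pictured with a toy model. In the sketch below (hypothetical names, heavily simplified), records from a transaction are appended to their partitions right away, but a consumer reading with isolation.level=read_committed only sees them once the whole transaction has committed. Real Kafka implements this with a transaction coordinator and commit/abort markers written into each partition, and a read_committed consumer waits on open transactions rather than skipping past them.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical toy model of atomic visibility across partitions.
 * Not Kafka's implementation: there are no markers, coordinator, or offsets here.
 */
public class TransactionVisibilityDemo {

    record Entry(String txnId, String value) {}

    static class ToyCluster {
        final Map<String, List<Entry>> partitions = new HashMap<>();
        final Set<String> committedTxns = new HashSet<>();

        // Records are appended immediately, tagged with their transaction.
        void write(String txnId, String partition, String value) {
            partitions.computeIfAbsent(partition, k -> new ArrayList<>()).add(new Entry(txnId, value));
        }

        // A single commit makes the transaction's records visible in every partition at once.
        void commit(String txnId) {
            committedTxns.add(txnId);
        }

        /** Roughly what a read_committed consumer would see (open/aborted records hidden). */
        List<String> readCommitted(String partition) {
            List<String> visible = new ArrayList<>();
            for (Entry e : partitions.getOrDefault(partition, List.of())) {
                if (committedTxns.contains(e.txnId())) {
                    visible.add(e.value());
                }
            }
            return visible;
        }
    }

    public static void main(String[] args) {
        ToyCluster cluster = new ToyCluster();

        // Transaction t1 writes to partitions of two different topics, then commits.
        cluster.write("t1", "debits-0", "debit:alice:100");
        cluster.write("t1", "credits-0", "credit:bob:100");
        cluster.commit("t1");

        // Transaction t2 writes one record and is never committed (e.g. the producer failed).
        cluster.write("t2", "debits-0", "debit:carol:50");

        System.out.println(cluster.readCommitted("debits-0"));  // [debit:alice:100]
        System.out.println(cluster.readCommitted("credits-0")); // [credit:bob:100]
    }
}
```

Either both of t1’s records are visible or neither is, which is the all-or-nothing behavior an idempotent producer alone cannot give you across partitions.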

Best Practices for Using Kafka Idempotent Producer

- Enable Idempotence by Default

Unless you have a specific reason not to, enabling idempotence is a no-brainer for most applications. Since Kafka 3.0 (KIP-679) it is already the default, so the main thing is not to turn it off by accident, for example by setting acks to something other than all without explicitly setting enable.idempotence.

- Monitor Producer and Broker Metrics

Use JMX to watch retry and error metrics, such as the producer’s record-retry-rate and record-error-rate, so you notice when retries spike or sends start failing.

- Combine with Transactions When Necessary

Transactions build on idempotence: a transactional producer is always idempotent. For multi-partition use cases, use transactions to achieve end-to-end exactly-once semantics.

- Test in Real-World Scenarios

Simulate failures like broker crashes or network issues to ensure your producer behaves as expected under different conditions.

References

- Transactions in Apache Kafka

- Apache Kafka Official Documentation: Idempotent Producer

- Learn Conduktor: Kafka Idempotent Producer

- Apache Kafka Wiki: Idempotent Producer